Platform Engineering

The Longer Something Doesn't Happen, the Sooner it Will

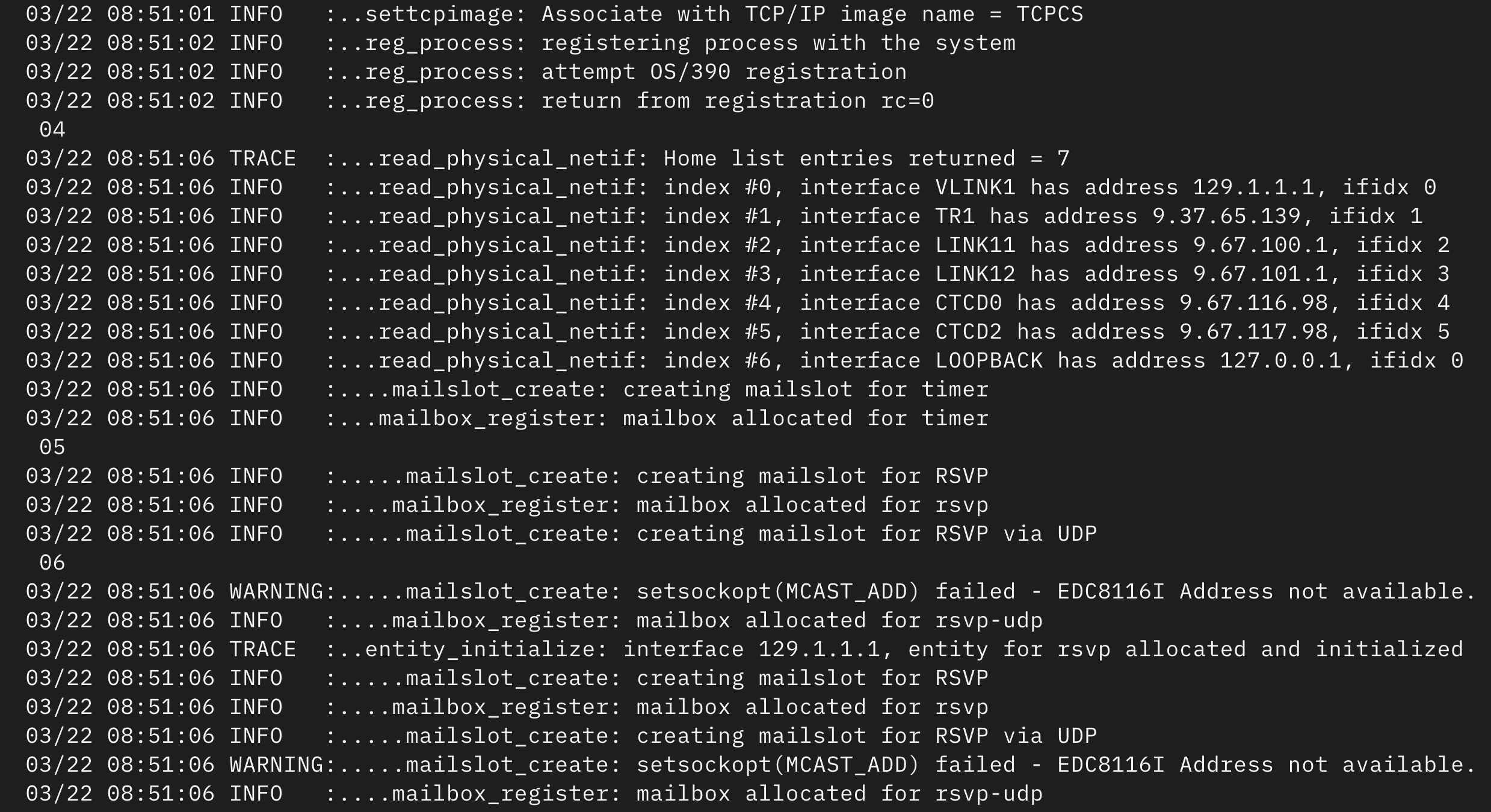

Breaking Up Log Output Using Bash

Development + #bash, #snippets & #tutorials

The Longer Something Doesn't Happen, the Sooner it Will

Platform Engineering + #devops, #sre & #chaos-engineering

Why I Built Plant Smart

Thoughts & Musings + #svelte & #projects

Learning Bash Through Pointless Fun

Development + #bash

Filtering Docker Containers with jq

Platform Engineering + #docker, #js, #json & #bash

Why Can't I Hold All These Slack Emojis?

Random Bullshit + #slack, #emoji, #bash & #api

Liberating Custom Slack Emojis

Random Bullshit + #emoji, #api & #bash

Save Money by Keeping Your AWS Account Clean

Platform Engineering + #aws, #devops, #security & #cost-optimisation